Improving OOD Generalization Through Metric-informed Weight-space Augmentation and Architecture Search

ABOUT THIS PROJECT

At a glance

Out-of-distribution (OOD) robustness means making reliable predictions even when the training and testing distributions differ. Achieving it is a topic of increasing attention because of its practical implications for deploying deep learning models reliably in unknown environments.

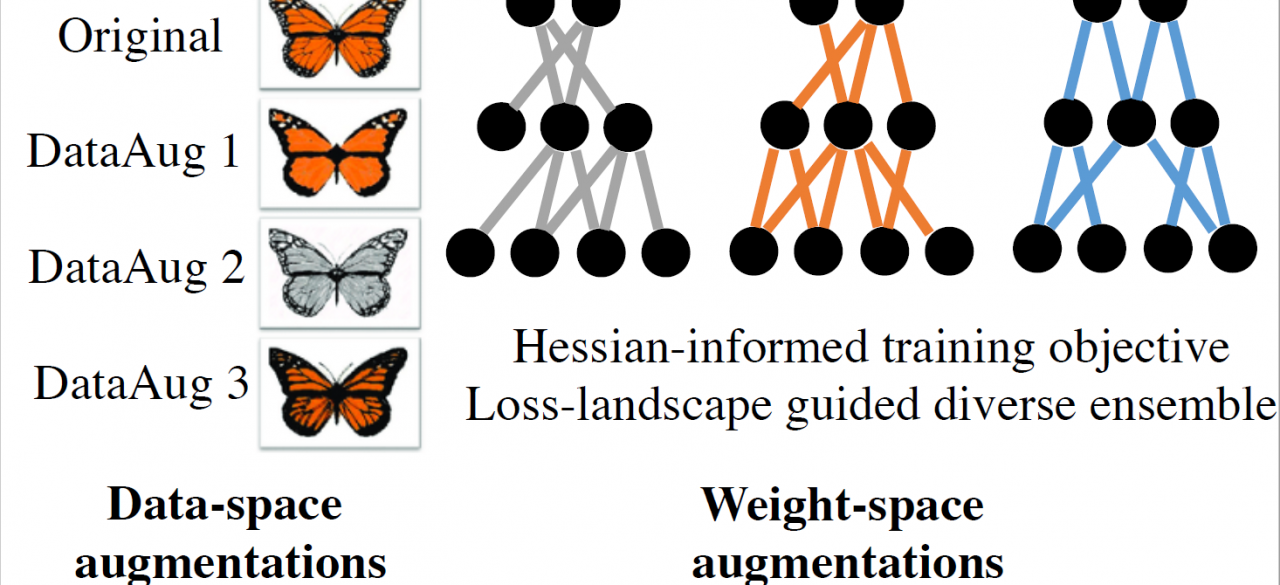

A common approach to improving OOD robustness relies on strong data augmentations, which make models insensitive to data-space perturbations. These perturbations simulate potential shifts in the testing distribution.

This proposal explores weight-space augmentations that improve a model's robustness to weight-space perturbations. Building on our strength in Hessian-based loss landscape analysis, we propose new training objectives and ensemble learning methods for improved weight-space robustness. We also propose metrics that measure the global structure of loss landscapes, going beyond local Hessian-based methods, and we will use these metrics to improve training and OOD robustness.
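To make "robustness to weight-space perturbations" concrete, here is a minimal sketch, not the project's method. It assumes a toy linear model with squared-error loss and isotropic Gaussian weight noise; the helper names `mse_loss` and `perturbed_loss_gap` are hypothetical. It estimates the average loss increase when the weights are randomly perturbed, which is one simple way to probe how flat the loss landscape is around a solution.

```python
import random


def mse_loss(weights, inputs, targets):
    """Mean squared error of a linear model y = w . x (toy stand-in for a network)."""
    total = 0.0
    for x, y in zip(inputs, targets):
        pred = sum(w * xi for w, xi in zip(weights, x))
        total += (pred - y) ** 2
    return total / len(inputs)


def perturbed_loss_gap(weights, inputs, targets, sigma=0.1, n_samples=200, seed=0):
    """Average loss increase under Gaussian weight noise with standard deviation sigma.

    Assumption: isotropic Gaussian noise is used purely for illustration;
    the proposal does not specify a perturbation distribution.
    """
    rng = random.Random(seed)
    base = mse_loss(weights, inputs, targets)
    gap = 0.0
    for _ in range(n_samples):
        noisy = [w + rng.gauss(0.0, sigma) for w in weights]
        gap += mse_loss(noisy, inputs, targets) - base
    return gap / n_samples


# Toy data generated by a known weight vector, so the loss at true_w is zero.
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
true_w = [1.5, -0.5]
targets = [sum(w * xi for w, xi in zip(true_w, x)) for x in inputs]

gap_small = perturbed_loss_gap(true_w, inputs, targets, sigma=0.05)
gap_large = perturbed_loss_gap(true_w, inputs, targets, sigma=0.5)
print(f"loss gap at sigma=0.05: {gap_small:.5f}")
print(f"loss gap at sigma=0.5:  {gap_large:.5f}")
```

For a quadratic loss evaluated at its minimum, the expected gap equals half the noise variance times the trace of the Hessian, so this kind of measurement is a local, Hessian-style probe. The global landscape metrics mentioned above would need to look beyond it.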

| principal investigators | researchers | themes |
|---|---|---|
| | | OOD Generalization, Loss landscape, Ensemble learning |

This project continues the work of the completed project, Measuring Prediction Trustworthiness and Safety Through Neural Network Loss Landscape Analysis.